How Fraudsters Capitalize on New Technology, and What Companies Can Do About It

Get ahead, or get left behind

New technology gets the people going. Just ask the folks coughing up a fair sum of cash for an Apple Vision Pro. Sure, these users may look like Splinter Cell operatives with their VR goggles on but, most likely, Apple’s foray into “spatial computing” will take off sooner rather than later.

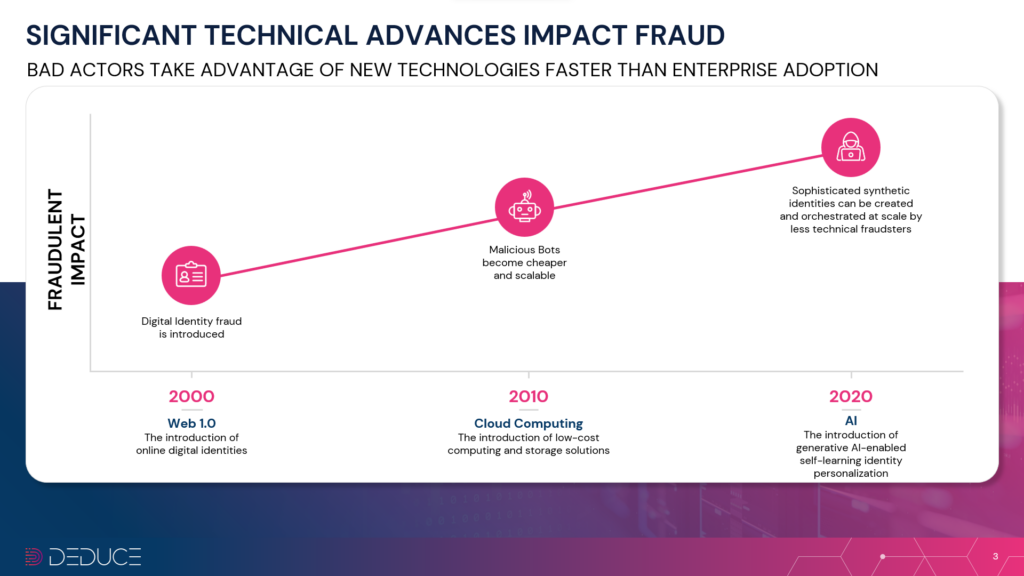

However, before everyday users and even large enterprises can adopt new technologies, another category of users is way ahead of them: fraudsters. These proactive miscreants adopt the latest tech and find new ways to victimize companies and their customers. Think metaverse and crypto fraud or, most recently, the use of generative AI to create legions of humanlike bots.

Look back through the decades and a clear pattern emerges: new tech = new threat. Phishing, for example, was the offspring of instant messaging and email in the mid-1990s. Even the “advance fee” or “Nigerian Prince” scam we associate with our spam folders originally cropped up in the 1920s due to breakthroughs in physical mail.

What can we learn from studying this troubling pattern? How can businesses adopt the latest wave of nascent technologies while protecting themselves from opportunistic fraudsters? In answering these questions, it’s helpful to examine the major technological advancements of the past 20+ years—and how bad actors capitalized at every step along the way.

The 2000s

The 2000s ushered in digital identities and, by extension, digital identity fraud.

Web 1.0 and the internet had exploded by the early aughts. PCs, e-commerce, and online banking increased the personal data available on the web. As more banks moved online and digital-only banks emerged, fintech companies like PayPal hit the ground running and online transactions skyrocketed. Fraudsters pounced on the opportunity. Phishing, Trojan horse viruses, credential stuffing, and weak-password exploits were among the many tricks that fooled users and led to breaches at notable companies and financial institutions.

Phishing scams, in which bogus yet legitimate-looking emails persuade users to click a link and input personal info, took off in the 2000s and are even more effective today. Thanks to AI-based tools like ChatGPT, phishing emails are remarkably sophisticated, targeted, and scalable.

Social media entered the frame in the 2000s, too, which opened a Pandora’s box of online fraud schemes that persist today. Fake profiles provided another avenue for phishing and social engineering that would only widen with the advent of smartphones.

The 2010s

The 2010s were all about the cloud. Companies went gaga over low-cost computing and storage solutions, only to go bonkers (or broke) due to the corresponding rise in bot threats.

By the start of the decade, Google, Microsoft, and AWS were all-in on the cloud. AWS brought serverless computing to the forefront at the 2014 re:Invent conference, and the two other big-tech powerhouses followed suit. Then came the container-sance: the release of Docker and Kubernetes, the mass adoption of DevOps, hybrid and multicloud strategies, and so on. But, in addition to their improved portability and faster deployment, containers afforded bad actors (and their bots) another attack surface.

The rise of containers, cloud-native services, and other cloudy tech in the 2010s led to a boom in innovation, efficiency, and affordability for enterprises—and for fraudsters. Notably, the Mirai botnet tormented global cloud services companies using unprecedented DDoS (distributed denial of service) attacks, and the 3ve botnet accrued $30 million in click-fraud over a five-year span.

Malicious bots had never been cheaper or more scalable, and brute-force and credential-stuffing attacks had never been more seamless or profitable. The next tech breakthrough would catapult bots to another level of deception.

The 2020s

AI has blossomed in the 2020s, especially over the past year, and once again fraudsters have flipped the latest technological craze into a cash cow.

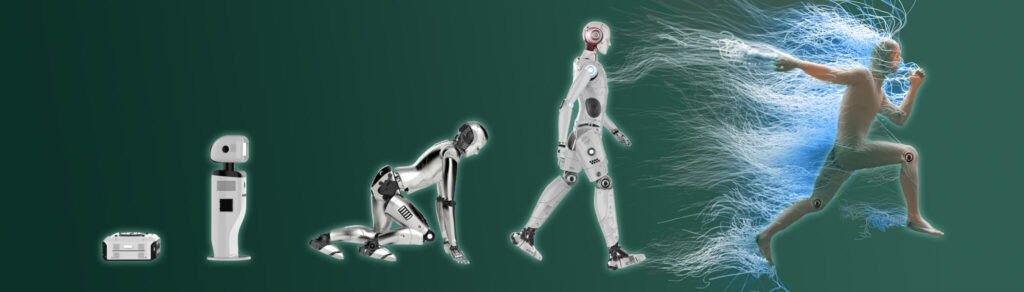

Amid the ongoing AI explosion, bad actors have specifically leveraged generative AI and self-learning identity personalization to line their pockets. It’s hard to say what’s scarier: how human these bots appear, or how easy it is for novice users to create them. The widespread availability of data and AI’s capacity to teach itself using LLMs (large language models) have spawned humanlike identities at massive scale. Less technical fraudsters can easily build and deploy these identities thanks to tools like WormGPT, otherwise known as “ChatGPT’s malicious cousin.”

The most nefarious offshoot of AI’s golden age may be SuperSynthetic™ identities. The most humanlike members of the synthetic fraud family tree, SuperSynthetics are all about the long con and don’t mind waiting several months to cash out. These identities, which can deepfake their way past account verification if need be, are realistically aged and geo-located with a legit credit history to boot, and they’ll patiently perform the online banking actions of a typical human to build trust and creditworthiness. Once that loan is offered, the SuperSynthetic lands its long-awaited reward. Then it’s on to the next bank.

Like Web 1.0 and cloud computing before it, AI’s superpowers have amplified the capabilities of both companies and the fraudsters who threaten their users, bottom lines and, in some cases, their very existence. This time around, however, the threat is smarter, more lifelike, and much harder to stop.

What now?

There’s undoubtedly a positive correlation between the emergence of technological trends and the growth of digital identity fraud. If a new technology hits the scene, fraudsters will exploit it before companies know what hit them.

Rather than getting ahead of the latest threats, many businesses are employing outdated mitigation strategies that woefully overlook the SuperSynthetic and stolen identities harming their pocketbooks, users, and reputations. Traditional fraud prevention tools scrutinize identities individually, prioritizing static data such as device, email, IP address, SSN, and other PII. The real solution is to analyze identities collectively and track dynamic activity data over time. This top-down strategy, with a sizable source of real-time, multicontextual identity intelligence behind it, is the best defense against digital identity fraud’s most recent evolutionary phase.
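To make the contrast between individual and collective analysis concrete, here is a minimal Python sketch. It is purely illustrative and is not how any particular product works: the `Event` fields, the `behavior_fingerprint` and `flag_lockstep_identities` helpers, and the `min_group_size` threshold are all hypothetical names chosen for this example. The idea is that each identity might look fine in isolation, but several identities repeating exactly the same activity pattern stand out once they are compared as a group.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One observed action. All fields are hypothetical, for illustration only."""
    identity_id: str  # account identifier
    network: str      # coarse network label, e.g. an IP prefix
    weekday: int      # 0 = Monday
    hour: int         # 0-23
    action: str       # e.g. "login", "balance_check"


def behavior_fingerprint(events):
    """Summarize one identity's activity as a sorted tuple of distinct observations."""
    return tuple(sorted({(e.network, e.weekday, e.hour, e.action) for e in events}))


def flag_lockstep_identities(events, min_group_size=3):
    """Return identities whose activity fingerprint is shared by a large enough group."""
    # Collect each identity's events over time.
    by_identity = defaultdict(list)
    for e in events:
        by_identity[e.identity_id].append(e)

    # Group identities by identical fingerprint (a deliberately crude similarity test).
    groups = defaultdict(set)
    for identity_id, evs in by_identity.items():
        groups[behavior_fingerprint(evs)].add(identity_id)

    # Flag every member of a group that reaches the assumed threshold.
    flagged = set()
    for members in groups.values():
        if len(members) >= min_group_size:
            flagged |= members
    return flagged


# Three accounts behave identically; one ordinary user does not.
events = []
for bot in ("a1", "a2", "a3"):
    events.append(Event(bot, "203.0.113", 1, 9, "login"))
    events.append(Event(bot, "203.0.113", 1, 9, "balance_check"))
events.append(Event("u1", "198.51.100", 2, 20, "login"))

print(sorted(flag_lockstep_identities(events)))  # ['a1', 'a2', 'a3']
```

Exact-match fingerprints are a simplification. A real system would presumably use fuzzier similarity measures and far richer signals, but the shape of the approach is the same: compare identities with each other, over time, rather than one record at a time.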

It’s not that preexisting tools in security stacks aren’t needed; it’s that these tools need help. At last count, the Deduce Identity Graph is tracking nearly 28 million synthetic identities in the US alone, including nearly 830K SuperSynthetic identities (a 10% increase from Q3 2023). If incumbent antifraud systems aren’t fortified, and companies continue to look at identities on a one-to-one basis, AI-generated bots will keep slipping through the cracks.

New threats require new thinking. Twenty years ago, phishing scams topped the fraudulent food chain. In 2024, AI-generated bots rule the roost. The ultimatum for businesses remains the same: get ahead, or get left behind.