New Executive Order Doesn’t Sufficiently Address AI-Generated Fraud

Synthetic fraud remains the elephant in the room

The Biden administration’s recent executive order “on Safe, Secure, and Trustworthy Artificial Intelligence” naturally caused quite a stir among the AI talking heads. The security community also joined the dialog and expressed varying degrees of confidence in the executive order’s ability to protect the federal government and private sector against bad actors.

Clearly, any significant effort to enforce responsible and ethical AI use is a step in the right direction, but this executive order isn’t without its shortcomings. Most notable is its inadequate plan of attack against synthetic fraudsters—specifically those created by Generative AI.

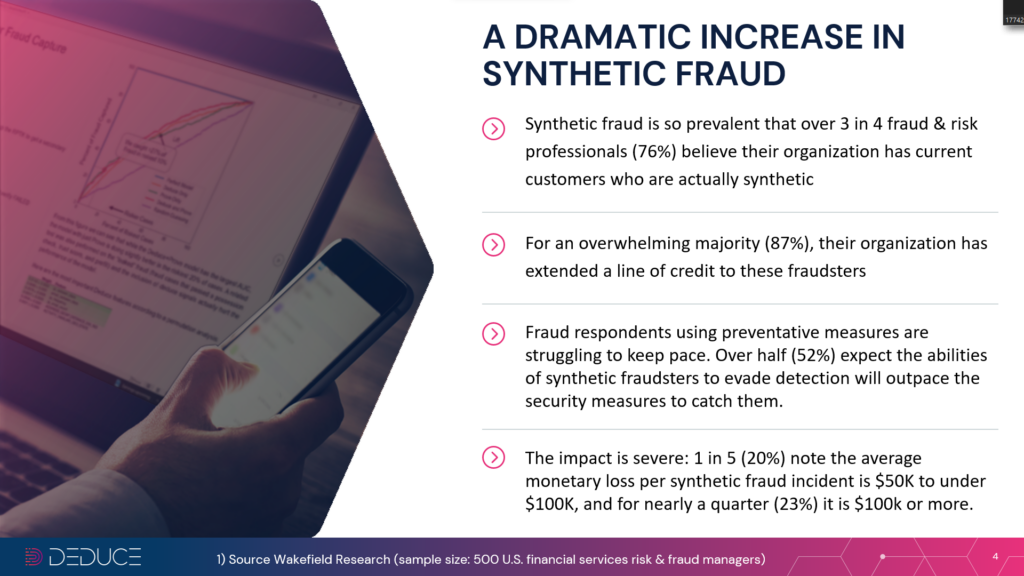

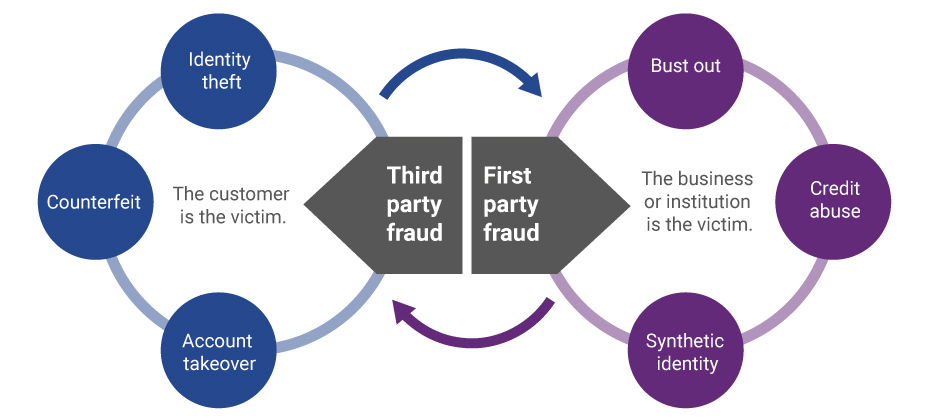

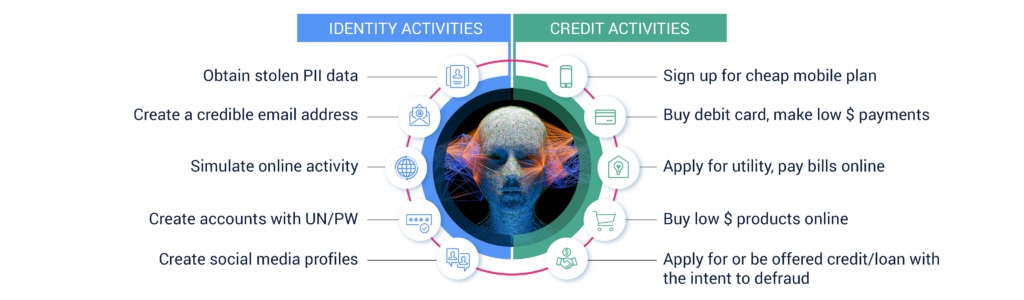

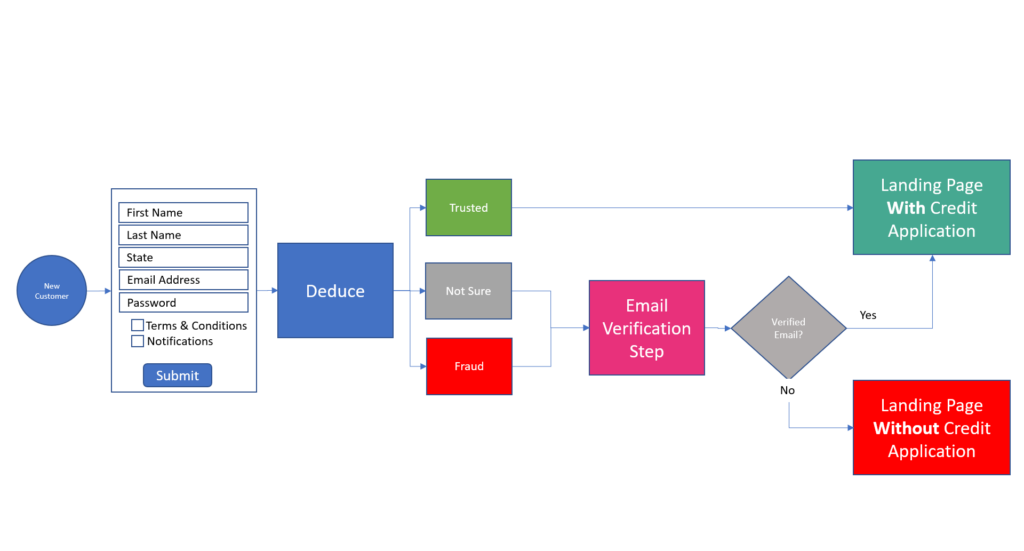

With online fraud reaching a record $3.56 billion through the first half of 2022 alone, financial institutions are an obvious target of AI-based synthetic identities. A Wakefield report commissioned by Deduce found that 76% of US banks have synthetic accounts in their database, and a whopping 86% have extended credit to synthetic “customers.”

However, the shortsightedness of the executive order also carries with it a number of social and political ramifications that stretch far beyond dollars and cents.

Missing the (water)mark

A key element of Biden’s executive order is the implementation of a watermarking system to differentiate between content created by humans and AI, a topical development in the wake of the SAG-AFTRA strike and the broader artist-versus-AI clash. Establishing the provenance of an object via a digital image or signature would seem like a sensible enough way to identify AI-generated content and synthetic fraud. That is, if all of the watermarking mechanisms currently at our disposal weren’t utterly unreliable.

A University of Maryland professor, Soheil Feizi, as well as researchers at Carnegie Mellon and UC Santa Barbara, circumvented watermarking verification by adding fake imagery. They were able to remove watermarks just as easily.

It’s also worth noting that the watermarking methods laid out in the executive order were developed by big tech. This raises concerns around a walled-garden effect in which these companies are essentially regulating themselves while smaller companies follow their own set of rules. And don’t forget about the fraudsters and hackers who, of course, will gladly continue using unregulated tools to commit AI-powered synthetic fraud, as well as overseas bad actors who are outside US jurisdiction and thus harder to prosecute.

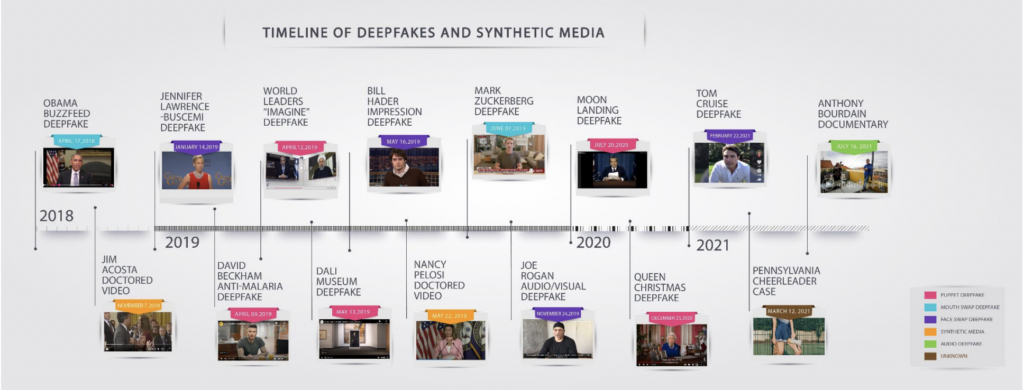

The deepfake dilemma

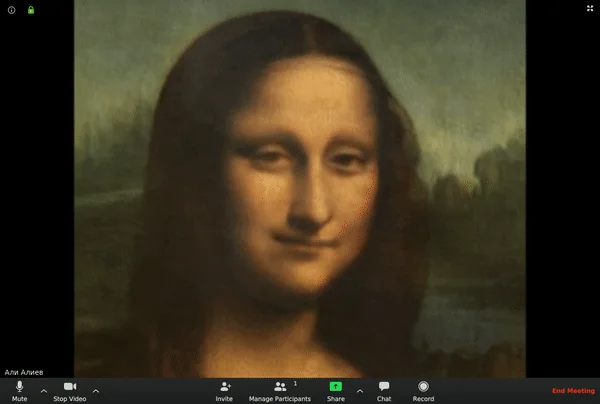

Another element of many synthetic fraud attacks, deepfake technology, is addressed in the executive order but a clear-cut solution isn’t proposed. Deepfaking is as complex and democratized as ever—and will only grow more so in the coming years—yet the executive order falls short of recommending a plan to continually evolve and keep pace.

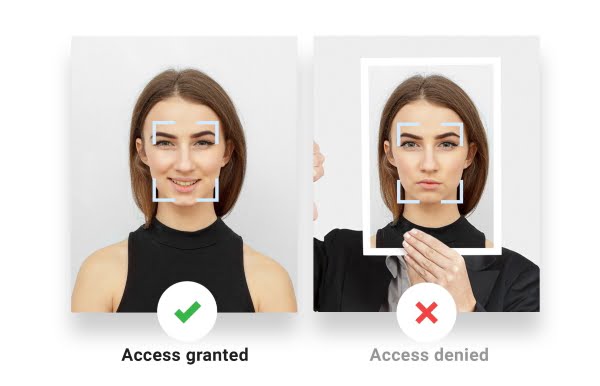

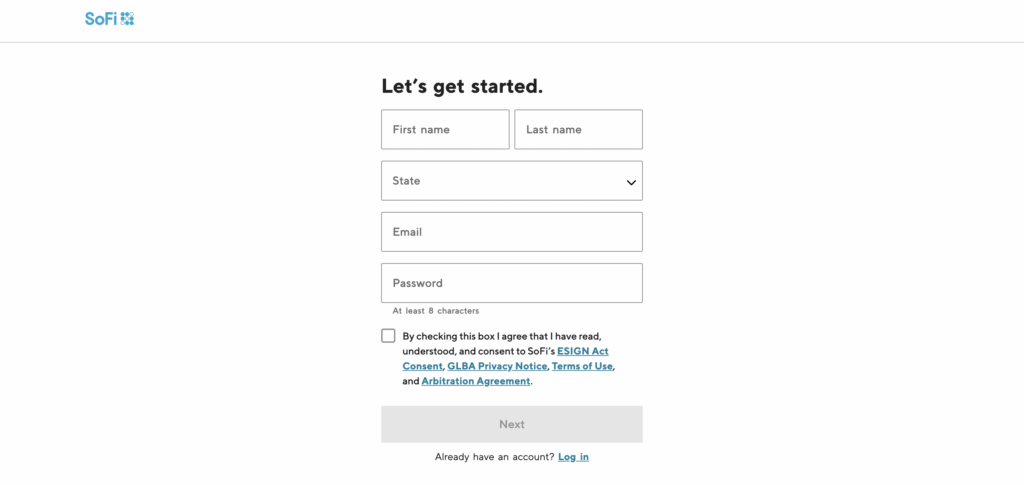

Facial recognition verification is employed at the government and state level, but even novice bad actors can use AI to deepfake their way past these tools. Today, anyone can deepfake an image or video with a few taps. Apps like FakeApp can seamlessly integrate someone’s face into an existing video, or generate an entirely new one. As little as a cropped face from a social media image can spawn a speaking, blinking, head-moving entity. Deepfaked selfie uploads and live video calls then pass verification with flying colors.

In this era of remote customer onboarding, coinciding with unprecedented access to deepfake tools, executive orders and other legislation need to offer a more concrete solution to deepfakes. Financial services (finserv) companies are in the crosshairs, but so are social media platforms and their users; the latter pose their own litany of dangers.

Synthetic fraud: multitudes of mayhem

The executive order’s watermarking notion and insufficient response to deepfakes don’t squelch the multibillion-dollar synthetic fraud problem.

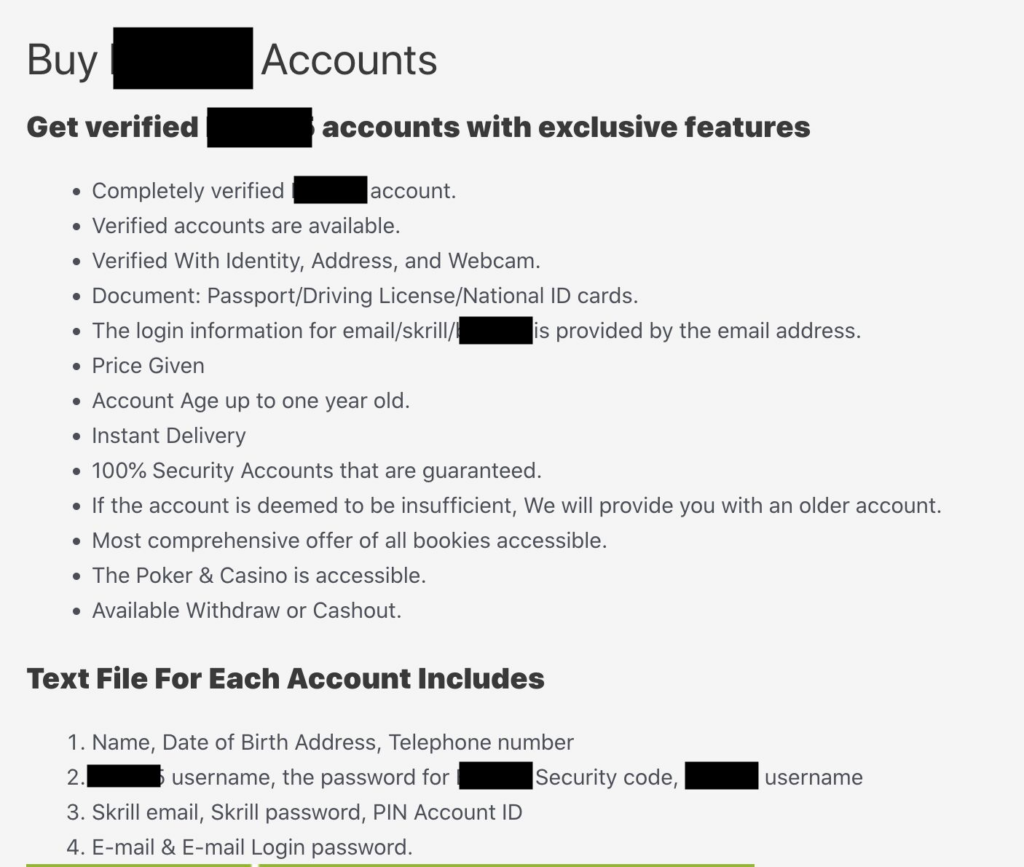

Synthetic fraudsters still have the upper hand. With Generative AI at their disposal, they can create patient and incredibly lifelike SuperSynthetic™ identities that are extremely difficult to intercept. Worse, “fraud-as-a-service” organizations peddle synthetic mule accounts from major banks, and also sell synthetic accounts on popular sports betting sites—new, aged, geo-located—for as little as $260.

More worrisome, amid the rampant spread of disinformation online, is the potential for synthetic accounts to cause social panic and political upheaval.

Many users struggle to identify AI-generated content on X (formerly Twitter), much less any other platform, and social networks that charge a nominal fee to “verify” an account offer synthetic identities a cheap way to appear even more authentic. All it takes is one post shared hundreds of thousands or millions of times for users to mobilize against a person, nation, or ideology. A single doctored image or video could spook investors, incite a riot, or swing an election.

“Election-hacking-as-a-service” is indeed another frightening offshoot of synthetic fraud, to the chagrin of politicians (or those on the wrong side of it, at least). These fraudsters weaponize their armies of AI-generated social media profiles to sway voters. One outfit in the Middle East interfered in more than 33 elections.

Banks or betting sites, social uprisings or rigged elections: unchecked synthetic fraud, buttressed by AI, will continue to wreak havoc in multitudinous ways if it isn’t combated by an equally intelligent and scalable approach.

The best defense is a good offense

The executive order, albeit an encouraging sign of progress, is too vague in its plan for stopping AI-generated content, deepfakes, and the larger synthetic fraud problem. The programs and tools it says will find and fix security vulnerabilities aren’t clearly identified. What do these look like? How are they better than what’s currently available?

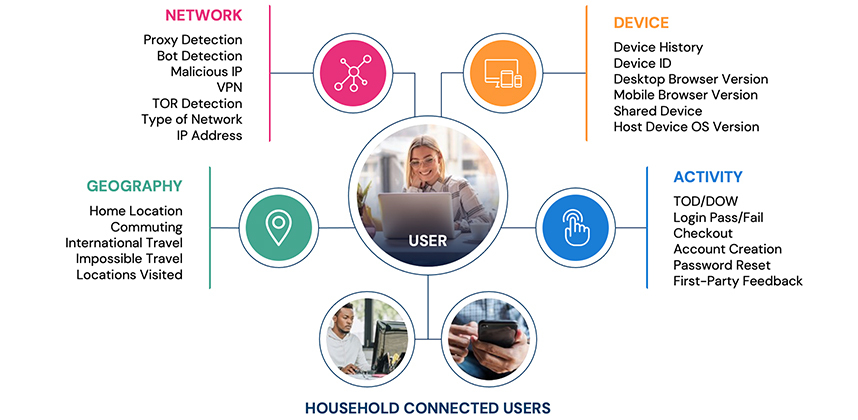

AI-powered threats grow smarter by the second. Verbiage like “advanced cybersecurity program” doesn’t say much; will these fraud prevention tools be continually developed so they’re in lockstep with evolving AI threats? To its credit, the executive order does mention worldwide collaboration in the form of “multilateral and multi-stakeholder engagements,” an important call-out given the global nature of synthetic fraud.

Aside from an international team effort, the overarching and perhaps most vital key to stopping synthetic fraud is an aggressive, proactive philosophy. Stopping AI-generated synthetic and SuperSynthetic identities requires a preemptive, not reactionary, approach. We shouldn’t wait for authenticated—or falsely authenticated—content and identities to show up, but rather stop synthetic fraud well before infiltration can occur. And, given the prevalence of synthetic identities, they should have a watermark all their own.