AI-Generated Identity Fraud Vs. Real-Time Identity Intelligence. Who Wins?

How a top-down approach can unmask AI-generated fraudsters

Whichever side of the AI debate you’re on, there’s no denying that AI is here to stay and has barely started to tap its potential.

AI makes life easier for consumers and businesses alike. However, the proliferation of AI-based tools helps fraudsters as well.

As the AI arms race heats up, one emerging threat tormenting businesses is AI-generated identity fraud. With help from generative AI, fraudsters can easily use previously acquired PII (Personally Identifiable Information) to establish a credible, human-like online identity, replete with an OK credit history, then leverage deepfakes to legitimize the synthetic identity with documents, voice, and video. As of April 2023, audio and video deepfakes alone have duped one-third of companies.

Without the proper fortification in place, financial services and fintech businesses are prime targets for AI-generated identities, new account opening fraud, and the resultant revenue loss.

The (multi)billion-dollar question is, how do these companies fight back when AI-generated identities are seemingly indistinguishable from real customers?

Playing the long game

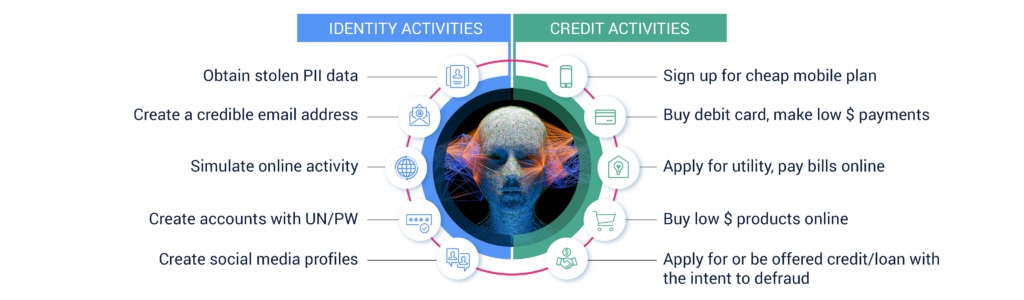

There are several ways in which AI helps create synthetic identities.

For one, social engineering and phishing with AI-powered tools are as easy as “PII.” Generative AI can crank out a malicious yet convincing email, or deepfake a document or voice, to obtain personal info. In terms of scalability, fraudsters can now manage thousands of fake identities at once thanks to AI-assisted CRMs, marketing automation software, and purpose-built fraud platforms such as FraudGPT and WormGPT. Thousands of synthetics creating “aged” and geo-located email addresses, signing up for newsletters, and making social media profiles and other accounts, all on autopilot. This unparalleled sophistication is the hallmark of an even more formidable synthetic identity: the SuperSynthetic™ identity.

Thanks to AI’s automation and effective use of previously stolen PII, SuperSynthetic identities can assemble a credible trail of online activity. These SuperSynthetics have a credible credit history, too (maybe not an 850, but a solid 700). Therein lies the other challenge with AI-generated identity fraud: the human bad actors behind the computer or phone screen, pulling the strings, are remarkably patient. They’ll invest actual money by making deposits over time into a newly opened bank account, or make small purchases on a retailer’s website to build “existing customer” status, to gradually forge a bogus identity that lands them north of $15K (according to the FTC, a net ROI of thousands of dollars). AI-generated fraud is a very profitable business.

The chart above shows how a fraudster boosts an identity’s credibility, both online and in its credit history, before opening a credit card or loan, or even transacting via BNPL (Buy Now Pay Later). They sign up for cheap mobile phone plans, such as Boost, Mint, or Cricket, or make small pre-paid debit card donations to charities linked to their social security number. They can even use AI to find rental vacancies in MLS listings in a geography that maps to their aged and geo-located legend, in order to establish an online activity history of paying utility bills. The patience, calculation, and cunning of these fraudsters are striking, and just as dangerous as the AI that fuels their SuperSynthetic identities.

Looking at the big picture

Neutralizing AI-generated identity fraud requires a new approach. Traditional bot mitigation and synthetic fraud prevention solutions reliant upon static data about a single identity need some extra oomph to stonewall persuasive SuperSynthetics.

These static data-based tools lack the dynamic, real-time data and scale necessary to pick up the scent of AI-generated identity fraud. Patterns and digital forensic footprints get overlooked, and the sophistication of these fake identities even outflanks manual review processes and tools like DocV.

The bigger problem is that, when today’s anti-fraud solutions pull data from a range of sources during the verification phase, they’re doing so on an individual identity basis. Why is this problematic? Because a SuperSynthetic identity on its own will look legitimate and pass all the verification checks, including a manual review, the last bastion of fraud prevention. However, analyzing that same identity from a high-level vantage point changes everything. The identity is revealed to be one member of a larger group of SuperSynthetic identities that share a common signature. Like a black light, this bird’s-eye view uncovers previously obscured digital forensic evidence.

But what does this evidence even look like? And what does it take to transition from an individualistic to a signature-centered approach?

The key to the evidence locker

AI-generated SuperSynthetic identities leave behind a variety of digital fingerprints or signatures. A top-down view reveals suspicious patterns across millions of fraudulent identities that are too similar to be a coincidence.

For example, if the same three identities post a comment on the New York Times website every Tuesday morning at 7:32 a.m. PST, the chance that these are three independent humans is vanishingly small, which strongly suggests that each is in fact SuperSynthetic.
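To make the idea concrete, here is a minimal Python sketch of that kind of cross-identity check. It is an illustration only, not Deduce's method: the function name `find_synchronized_groups`, the event tuple layout, the site label, and the thresholds are all assumptions made for this example. It buckets activity by site, weekday, hour, and minute, then flags any group of identities that lands in the same bucket week after week.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from itertools import combinations


def find_synchronized_groups(events, min_weeks=3, group_size=3):
    """Flag identity groups that act in lockstep across several weeks.

    events: iterable of (identity_id, site, timestamp) tuples (hypothetical layout).
    Returns {(slot, identity_group): number_of_weeks_seen_together}.
    """
    # slot (site, weekday, hour, minute) -> ISO week -> identities seen in that slot
    slots = defaultdict(lambda: defaultdict(set))
    for identity, site, ts in events:
        slot = (site, ts.weekday(), ts.hour, ts.minute)
        week = tuple(ts.isocalendar()[:2])  # (ISO year, ISO week number)
        slots[slot][week].add(identity)

    flagged = {}
    for slot, weeks in slots.items():
        # Count in how many distinct weeks each candidate group co-occurred.
        # Note: enumerating combinations is fine for a sketch, not for large slots.
        counts = defaultdict(int)
        for identities in weeks.values():
            for group in combinations(sorted(identities), group_size):
                counts[group] += 1
        for group, n_weeks in counts.items():
            if n_weeks >= min_weeks:
                flagged[(slot, group)] = n_weeks
    return flagged


# Illustrative data: three identities commenting every Tuesday at 7:32,
# plus one unrelated identity active at a different time.
first_tuesday = datetime(2023, 4, 4, 7, 32)
events = [
    (identity, "news-site", first_tuesday + timedelta(weeks=w))
    for w in range(3)
    for identity in ("id_a", "id_b", "id_c")
]
events.append(("id_d", "news-site", datetime(2023, 4, 5, 12, 1)))

flagged = find_synchronized_groups(events)
for (slot, group), n_weeks in flagged.items():
    print(slot, group, n_weeks)
```

No single identity in this data looks unusual on its own; the signal only appears when the identities are compared against each other, which is the point of the top-down view.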

Switching over to a top-down approach isn’t merely a philosophical change. Unlocking the requisite evidence to thwart AI-generated identities demands premium identity intelligence at scale, combined with sophisticated ML that gathers and analyzes large swaths of real-time data from diverse sources.

In short, it takes an activity-based, real-time identity graph capable of sifting through hundreds of millions of identities.

Protect your margins (and UX)

A ginormous real-time identity graph rivaling the likes of big tech? This may seem like an unrealistic path to stopping AI-generated identities. It isn’t.

Deduce employs the largest identity graph in the US: 780 million privacy-compliant US identity profiles and 1.5 billion daily user events across 150,000+ websites and apps. Additionally, Deduce has previously seen 89% of new users by the time they reach the account creation stage, where AI-generated synthetics typically pass through undetected, and has seen 43% of these users just hours before they enter the new account portal.

Deduce’s premium identity intelligence, patented technology, and formidable ML algorithms enable a multi-contextualized, top-down approach. Identities are analyzed against hundreds of millions of signatures of synthetic fraudsters to ensure they’re the real McCoy. It’s a far superior alternative to overtightening existing risk models and causing unnecessary friction followed by churn, reputational harm, and revenue loss.

Want to outsmart AI-generated identity fraud while preserving a trusted user experience? Contact us today.