Deepfake Digest: Elvis, Dating Apps, & the 2024 Olympics

No person or institution is safe from Gen AI fraud

As AI-generated fraud continues to proliferate, so do real-world cases showing how deceptively humanlike it has become. Automated and highly intelligent SuperSynthetic™ identities, driven by Gen AI and deepfake technology, are defrauding banks and colleges, meddling in elections, and targeting any other institution or person they can make a pretty penny off.

Deloitte expects Gen AI fraud to cost US banks $40B by 2027, up from $12.3B in 2023 (a compound annual growth rate of 32%). This prognostication, which bodes ill for industries beyond banking as well, isn't as bold as it sounds. Just this year, a fraudster posing as a deepfaked CFO on a video call convinced a Hong Kong finance worker to wire nearly $26M.

AI-generated posts and comments are just as dangerous for businesses, yet "sweeping" legislation (e.g., the EU Digital Services Act) fails to adequately address this threat. Meanwhile, tools such as FraudGPT, designed to write phishing copy, can also whip up social media posts that enrage a specific audience to drive engagement and advertising revenue.

The increased frequency of Gen AI fraud should make it a top priority for cybersecurity teams. From the upcoming Olympic Games to The King of Rock and Roll himself, here are some recent examples of Gen AI fraudsters wreaking havoc, and how businesses can protect themselves from similar attacks.

Elvis has not left the building

Elvis may be spared from the threat of Gen AI fraud, but his legendary Graceland mansion isn’t so lucky.

Last month, Graceland, now a museum, was reportedly facing a foreclosure sale. Forged documents claimed that the late Lisa Marie Presley (Elvis' daughter) had taken out a $3.8M loan, with Graceland as collateral, from a lending company later determined to be bogus.

This is a high-profile example of home title fraud in which bad actors pretend to be homeowners. After finding a suitable mark—someone elderly, recently deceased, or similarly vulnerable—fraudsters try to refinance or sell the house and cash out.

Fortunately, a judge stopped the sale of Graceland before the fake “Naussany Investments and Private Lending” company could profit from its clever caper. But the scheme, carried out by a prominent dark web fraudster with “a network of ‘worms’ placed throughout the United States,” shows how easy it is to deepfake IDs, documents, and signatures—even those of public figures.

Swiping right on dating app fraud

Dating apps are a hotbed, so to speak, for catfishing and pig butchering. While Gen AI has boosted the effectiveness of both scams, pig butchering, in which a fraudster slowly builds rapport with a victim before asking them for money, is the more worrisome.

There were 64K reported romance scams in the US last year, totaling $1.14B in losses. The true figures are likely even higher, because many victims, ashamed of being suckered out of thousands of dollars in cash or cryptocurrency, don't come forward.

Swindling unsuspecting dating app users is easier than ever thanks to (you guessed it) deepfakes. Photos, audio, and video can all be AI-generated. AI-powered chatbots are practically indistinguishable from humans, and fraudsters can also leverage AI to deploy fake profiles at massive scale, all on autopilot. Background-checking a suspected fake user is unlikely to work for several reasons: large language models (ChatGPT, Gemini, etc.) can convincingly build out social media profiles, and unique AI-generated profile pictures won't turn up in a reverse image search.

Dating app fraud, which is also a significant drag on the user experience for these businesses, inspired a popular 2024 Netflix documentary: Ashley Madison: Sex, Lies & Scandal. The documentary details how Gen AI chatbots on the titular dating app build credibility by showing familiarity with hotspots within a victim's zip code. Perhaps the doc's biggest claim is that 60% of Ashley Madison profiles are fake.

Russia “medals” with Paris Olympics

Russia, barred from competing under its own flag at this year's Olympics because of the war in Ukraine, used Gen AI and deepfakes to retaliate against the International Olympic Committee. The goal: smear the committee's reputation, and stoke fear of a terrorist attack at the Games to dissuade fans from attending.

Most notably, Russian bad actors posted a fake, disparaging online documentary ("Olympics Has Fallen") and implied that Netflix had backed the production. To further legitimize the documentary, they generated bogus glowing reviews attributed to The New York Times and other prominent news outlets, and used a deepfaked Tom Cruise voice to suggest his involvement and support.

The follow-up to this cringeworthy tactic was a series of fake news reports spreading more disinformation about the Olympics. A knockoff reproduction of a French newscast reported that nearly a quarter of purchased tickets for the Games in Paris had been returned due to fears of a terrorist attack. Another video, falsely attributed to the CIA and a French intelligence agency, urged spectators to avoid the Olympics because of, again, potential terrorism.

Russia’s deepfaked “medaling” with the Olympics is yet another example of why no organization or individual is safe from Gen AI-based fraud. So, what’s the fix?

Catch it early, or never at all

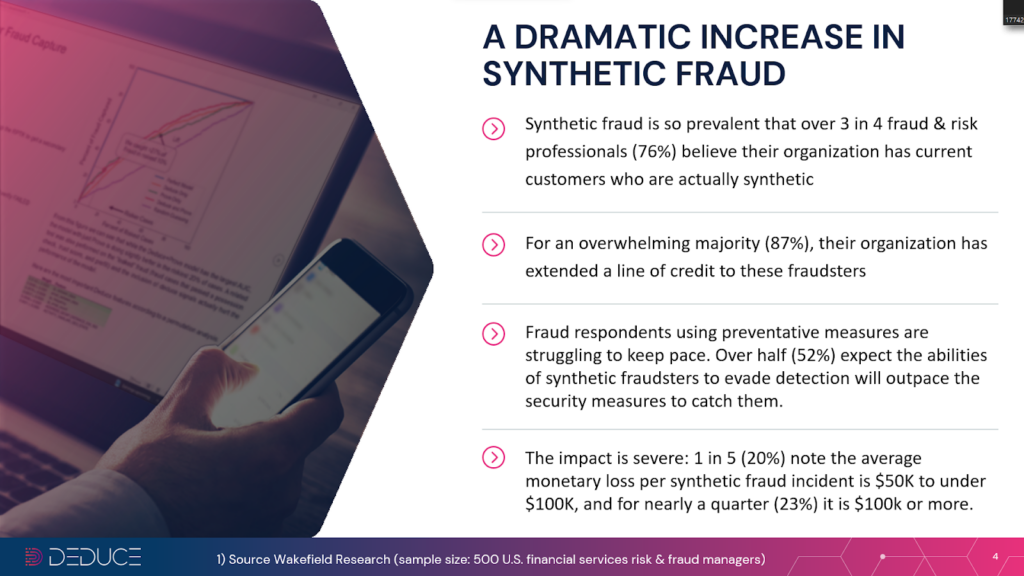

It's never been more imperative to fortify synthetic fraud defenses. "Frankenstein" identities, stitched together from real and fake PII (Personally Identifiable Information), have evolved into SuperSynthetic identities with Gen AI and deepfakes at their disposal. It's no wonder fraud is up 20% this year, with synthetics comprising 85% of all fraud cases.

Uphill as the battle might seem, the “early bird gets the worm” adage offers hope for finservs and other businesses threatened by Gen AI fraud. In addition to preemptive detection (prior to account creation), neutralizing Gen AI fraud—including SuperSynthetics—requires a heap of real-time, multicontextual, activity-backed identity intelligence.

Obtaining the requisite identity intelligence to stop Gen AI fraud is a tall task for any company not named Google or Microsoft. But Deduce’s infrastructure, and unique fraud prevention strategy, is plenty tall enough.

Deduce's "signature" approach differs from traditional antifraud tools that hunt fraudsters one by one. By looking at identities collectively, from a bird's-eye view, Deduce recognizes patterns of behavior that typify SuperSynthetics. Despite their humanlike behavior and extreme patience (they often "play nice" for months before striking), SuperSynthetic identities aren't perfect. For example, when multiple identities perform social media or banking activities on the same day and at the same time every week, coincidence stops being a plausible explanation.
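The following rough Python sketch illustrates the "same day and time every week" signal. It groups identities by the weekly time slots they keep returning to and flags any slot that several identities share. This is not Deduce's implementation. The event format (an identity ID plus a timestamp, all in one time zone), the function names `recurring_slots` and `find_lockstep_clusters`, and the thresholds `min_weeks` and `min_cluster_size` are all hypothetical choices made for this example.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, Set, Tuple

# Hypothetical slot key: (weekday 0=Mon..6=Sun, hour 0..23).
Slot = Tuple[int, int]


def recurring_slots(events: Iterable[Tuple[str, datetime]],
                    min_weeks: int = 3) -> Dict[str, Set[Slot]]:
    """Per identity, return the weekly (weekday, hour) slots it was active in
    during at least `min_weeks` distinct ISO weeks (hypothetical threshold)."""
    weeks_seen: Dict[Tuple[str, int, int], Set[Tuple[int, int]]] = defaultdict(set)
    for identity, ts in events:
        iso_year, iso_week, _ = ts.isocalendar()
        weeks_seen[(identity, ts.weekday(), ts.hour)].add((iso_year, iso_week))

    slots: Dict[str, Set[Slot]] = defaultdict(set)
    for (identity, weekday, hour), weeks in weeks_seen.items():
        if len(weeks) >= min_weeks:
            slots[identity].add((weekday, hour))
    return slots


def find_lockstep_clusters(events: Iterable[Tuple[str, datetime]],
                           min_weeks: int = 3,
                           min_cluster_size: int = 3) -> Dict[Slot, Set[str]]:
    """Flag weekly slots that several identities keep hitting in lockstep."""
    identities_by_slot: Dict[Slot, Set[str]] = defaultdict(set)
    for identity, slot_set in recurring_slots(events, min_weeks).items():
        for slot in slot_set:
            identities_by_slot[slot].add(identity)
    # Keep only slots shared by enough identities to look coordinated.
    return {slot: ids for slot, ids in identities_by_slot.items()
            if len(ids) >= min_cluster_size}


if __name__ == "__main__":
    tuesday = datetime(2024, 7, 2, 14, 5)  # a Tuesday, 2:05 p.m.
    # Four identities active every Tuesday around 2 p.m. for four weeks.
    events = [(f"user{i}", tuesday + timedelta(weeks=w, minutes=i))
              for i in range(4) for w in range(4)]
    events.append(("casual", tuesday))  # one-off activity in the same slot
    print(find_lockstep_clusters(events))
```

The sketch buckets slots by weekday and hour, so activity at 2:05 and at 2:40 counts as the same slot. A real system would presumably tune that bucket width and weigh this signal alongside others instead of acting on it alone.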

Cross-referencing these behavioral patterns with trust signals such as device, network, and geolocation further roots out SuperSynthetic bad apples. Deduce's trust scores are 99.5% accurate, so companies can rest assured that any identity deemed legit has been seen with recency and frequency on the Deduce Identity Graph. And if Deduce hasn't seen an identity across its network, fraud teams can flag it with confidence.
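The decision logic in this paragraph can be sketched as a simple rule. Identities seen recently and often, with matching device, network, and geolocation signals, are trusted. Identities never seen at all are flagged. Everything in the sketch is hypothetical: `IdentityObservation`, `assess`, and the 30-day and five-sighting thresholds are illustrative assumptions, not Deduce's scoring model, which the post doesn't describe.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class IdentityObservation:
    """Hypothetical summary of what an identity graph knows about one identity."""
    last_seen: Optional[datetime]  # None if never observed on the network
    sightings_90d: int             # observations in the trailing 90 days
    known_device: bool             # device previously linked to this identity
    known_network: bool            # network previously linked to this identity
    geo_consistent: bool           # geolocation fits the identity's history
    in_lockstep_cluster: bool = False  # e.g., flagged by a weekly-pattern check


def assess(obs: IdentityObservation, now: datetime,
           max_age: timedelta = timedelta(days=30),
           min_sightings: int = 5) -> str:
    """Return 'flag', 'trusted', or 'review' using illustrative thresholds."""
    # Never seen anywhere on the network: treat as high risk.
    if obs.last_seen is None:
        return "flag"
    # Coordinated behavior outweighs otherwise clean signals.
    if obs.in_lockstep_cluster:
        return "flag"
    recent = now - obs.last_seen <= max_age
    frequent = obs.sightings_90d >= min_sightings
    signals_match = obs.known_device and obs.known_network and obs.geo_consistent
    if recent and frequent and signals_match:
        return "trusted"
    # Anything in between goes to a human reviewer.
    return "review"


if __name__ == "__main__":
    now = datetime(2024, 8, 1)
    regular = IdentityObservation(now - timedelta(days=2), 14, True, True, True)
    print(assess(regular, now))  # prints "trusted"
```

The sketch returns a three-way label instead of a yes/no answer. That way, ambiguous identities, such as a long-dormant but otherwise consistent user, go to a reviewer rather than being blocked automatically.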

When it comes to Gen AI fraud and the SuperSynthetic identities it empowers, no person or institution is safe. Music icons, dating apps, the Olympic Games, finservs, you name it, are all susceptible to being deepfaked or otherwise bamboozled by a fake human. Any chance of fighting back demands preemptive, real-time identity intelligence. Catch ‘em early, or users, bottom lines, and reputations are in for a rude awakening.