Fake Humans are Causing Real (Big) Problems

76% of finservs are victims of synthetic fraud

In 1938, Orson Welles’ infamous radio broadcast of The War of the Worlds convinced thousands of Americans to flee their homes for fear of an alien invasion. More than 80 years later, the public is no less gullible, and technology unfathomable to people living in the 1930s allows fake humans to spread false information, bamboozle banks, and otherwise raise hell with little to no effort.

These fake humans, also known as synthetic identities, are ruining society in myriad ways: tampering with electorate polls and census data, disseminating misleading social media posts with real-world consequences, and sharing fake articles on Reddit that subsequently skew the large language models behind platforms such as ChatGPT. And, of course, bad actors can leverage fake identities to steal millions from financial institutions.

The bottom line is this: synthetic fraud is prevalent; financial services companies (finservs), social media platforms, and many other organizations are struggling to keep pace; and the impact, both now and in the future, is frighteningly palpable.

Here is a closer look at how AI-powered synthetic fraud is infiltrating multiple facets of our lives.

Accounts for sale

If you need a new bank account, you’re in luck: obtaining one is as easy as buying a pair of jeans and, in all likelihood, just as cheap.

David Maimon, a criminologist and Georgia State University professor, recently shared a video from Mega Darknet Market, one of the many cybercrime syndicates slinging bank accounts like Girl Scout Cookies. Mega Darknet and similar “fraud-as-a-service” organizations peddle mule accounts from major bank brands (in this case Chase) that were created using synthetic identity fraud, in which scammers combine stolen Personally Identifiable Information (PII) with made-up credentials.

But these cybercrime outfits take it a step further. With Generative AI at their disposal, they can create SuperSynthetic™ identities that are incredibly patient, lifelike, and difficult to catch.

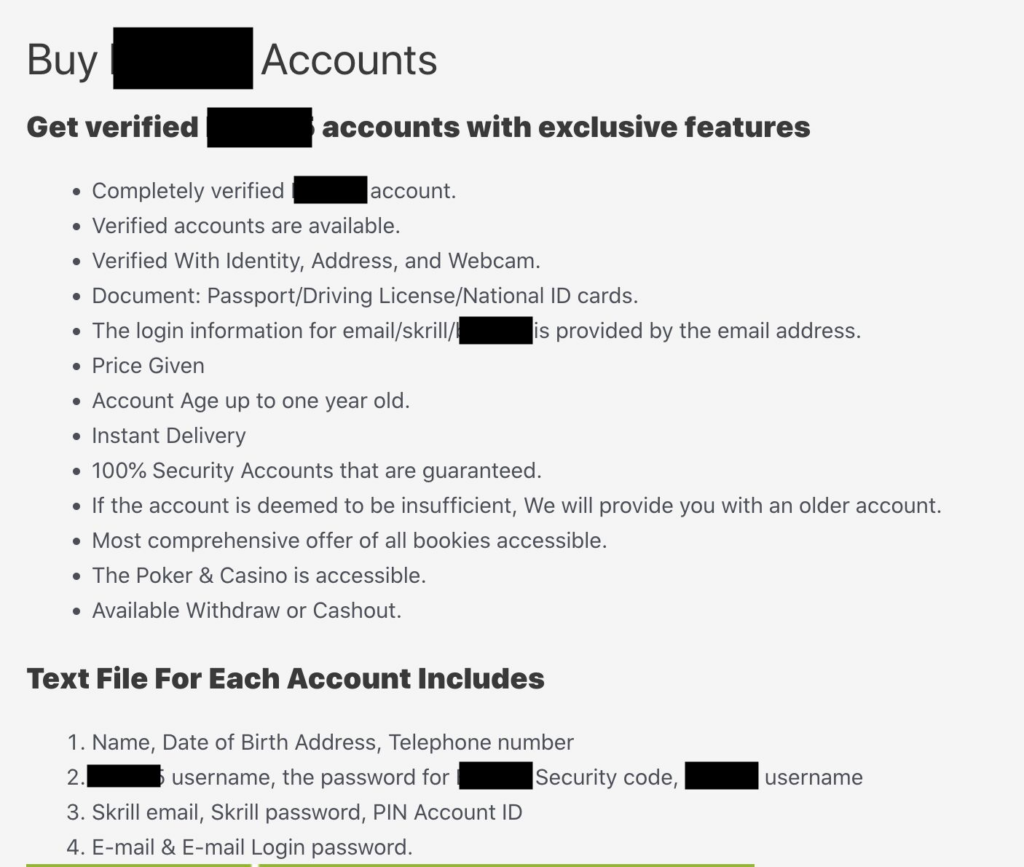

Aside from bank accounts, fraudsters are selling accounts on popular sports betting sites. The verified accounts—complete with name, DOB, address, and SSN—can be new or aged and even geo-located, with a two-year-old account costing as little as $260. Perfect for money launderers looking to wash stolen cash.

Cyber gangs like Mega Darknet also offer access to the very Generative AI tools they use to create synthetic accounts. This includes deepfake technology which, besides fintech fraud, can help carry out “sextortion” schemes.

X-cruciatingly false

Anyone who’s followed the misadventures of X (formerly Twitter) over the past year, or used any social media since the late 2010s, knows that Elon’s embattled platform is a breeding ground for bots and misinformation. Generative AI only exacerbates the problem.

A recent study found that X users couldn’t distinguish AI-generated content (GPT-3) from human-generated content. Most alarming is that these same users trusted AI-generated posts more than posts from real humans.

These synthetic accounts and their large-scale misinformation campaigns pose myriad risks in the US, where 20% of the population famously can’t locate the country on a world map, and elsewhere, especially if said accounts are “verified.” It wouldn’t take much to incite a riot, or stoke anger and subsequent violence toward a specific group of people. How about sharing a bogus picture of an exploded Pentagon that impacts the stock market? Yep. That, too.

Election-hacking-as-a-service

Few topics are as timely, or rile up users as reliably, as election interference, another byproduct of the fake human (and fake social media) epidemic. Spreading false information in service of a particular political candidate or party long predates social media, but the stakes are now far higher.

If fraud-as-a-service isn’t ominous-sounding enough, election-hacking-as-a-service might do the trick. Groups with access to armies of fake social media profiles are weaponizing disinformation to sway elections any which way. Team Jorge is just one example of these election meddling units. Brought to light via a recent Guardian investigation, Team Jorge’s mastermind Tal Hanan claimed he manipulated upwards of 33 elections.

The rapid creation and dissemination of fake social media profiles and content is far more harmful and widespread with Generative AI in the fold. Flipping elections is one of the worst possible outcomes, but grimmer consequences will arise if automated disinformation isn’t thwarted by an equally intelligent and scalable solution.

Finservs in the crosshairs

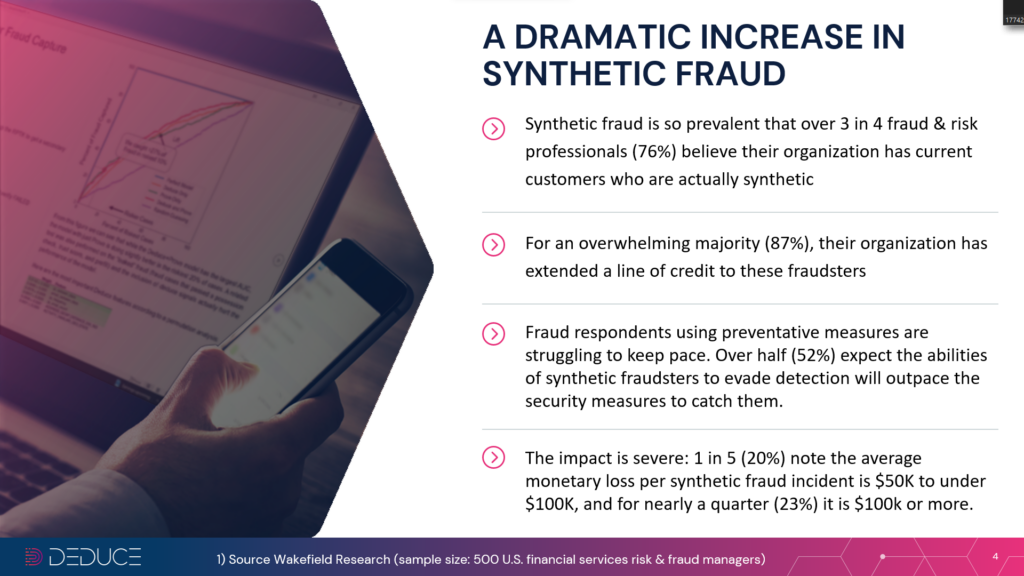

Cash is king. Synthetic fraudsters want the biggest haul, even if it’s a slow-burn operation stretched out over a long period. Naturally, that means finservs, which lost nearly $2 billion to bank transfer or payment fraud last year, are number one on their hit list.

Most finservs today don’t have the tools to effectively combat AI-generated synthetic and SuperSynthetic fraud. First-party synthetic fraud—fraud perpetrated by existing “customers”—is rising thanks to SuperSynthetic “sleeper” identities that can imitate human behavior for months before cashing out and vanishing at the snap of a finger. SuperSynthetics can also use deepfake technology to evade detection, even if banks request a video interview during the identity verification phase.

It’s not like finservs are dilly-dallying. In a study from Wakefield, commissioned by Deduce, 100% of those surveyed had synthetic fraud prevention solutions installed along with sophisticated escalation policies. However, more than 75% of finservs already had synthetic identities in their customer databases, and 87% of those respondents had extended credit to fake accounts.

Fortunately for finservs and others trying to neutralize synthetic fraud, it’s not impossible to outsmart generative AI. With the right foundation in place—specifically a massive and scalable source of real-time, multicontextual, activity-backed identity intelligence—and a change in philosophy, even a foe that grows smarter and more humanlike by the second can be thwarted.

This philosophical change is rooted in a top-down, bird’s-eye approach that differs from traditional, individualistic fraud prevention solutions that examine identities one by one. A macro view, on the other hand, sees identities collectively and groups them into a single signature which uncovers a trail of digital footprints. Behavioral patterns such as social media posts and account actions rule out coincidence. The SuperSynthetic smokescreen evaporates.
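To make the macro view a little more concrete, here is a minimal Python sketch of the general idea: instead of scoring accounts one by one, group them by a shared signature and look at the clusters. This is an illustration only, not a description of any vendor's actual method. The `Account` fields, the `signature` function, and the `min_size` threshold are all hypothetical stand-ins for whatever activity signals a real system would have.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    # All fields are hypothetical examples of activity signals.
    account_id: str
    device_hash: str   # assumed device fingerprint
    ip_prefix: str     # assumed network prefix, e.g. "203.0.113"
    active_hour: int   # assumed typical hour of activity (0-23)


def signature(acct: Account) -> tuple:
    """Collapse an account's signals into one comparable signature."""
    return (acct.device_hash, acct.ip_prefix, acct.active_hour)


def suspicious_clusters(accounts, min_size=3):
    """Group accounts by signature and keep groups of at least min_size.

    One account with a given signature may be a coincidence; many
    accounts sharing the same one are worth a closer look.
    """
    groups = defaultdict(list)
    for acct in accounts:
        groups[signature(acct)].append(acct.account_id)
    return {sig: ids for sig, ids in groups.items() if len(ids) >= min_size}


accounts = [
    Account("a1", "dev-x", "203.0.113", 3),
    Account("a2", "dev-x", "203.0.113", 3),
    Account("a3", "dev-x", "203.0.113", 3),
    Account("b1", "dev-y", "198.51.100", 14),
]
clusters = suspicious_clusters(accounts)
```

Viewed individually, each of the first three accounts could pass for a real customer. Viewed together, they share one device, one network, and one activity pattern, which is the kind of collective trail the individual view misses. A production system would presumably use far richer signals and fuzzier matching than this exact-match grouping.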

Whether it’s bad actors selling betting accounts, social media platforms stomping out disinformation, or finservs protecting their bottom lines, fake humans are more formidable than ever with generative AI and SuperSynthetic fraud at their disposal. Most companies seem to be aware of the stakes, but singling out bogus users and SuperSynthetics requires a retooled approach. Otherwise, revenue, users, and brand reputations will dwindle, and the ways in which fake accounts wreak havoc will multiply.